If your AI initiative looks like “We’ll call the model from this service”, you’re not integrating AI. You’re adding a very expensive, probabilistic dependency into a deterministic system.

I’ve seen multiple architectures where AI was “successfully integrated.” The pattern was always the same: a new microservice wrapping a model API, wired into an existing request/response flow. It worked in staging. In production, it amplified latency, introduced non-determinism, and quietly inflated OpEx.

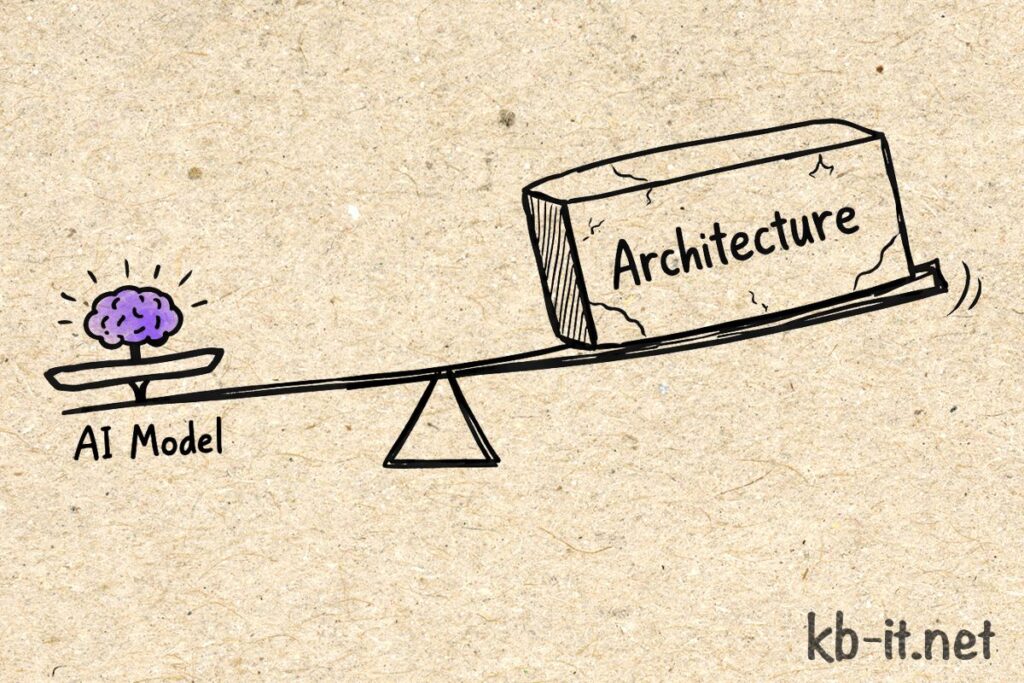

The problem isn’t the model. The problem is trying to graft AI onto an architecture that was never designed for it.

AI is not a feature. It is an architectural force multiplier. And multipliers amplify weaknesses.

Quick summary (for busy readers)

- AI violates core architectural assumptions: determinism, stable latency, and predictable cost.

- Wrapping AI in a microservice does not isolate its risk.

- Synchronous integration is the most common failure pattern.

- AI requires economic circuit breakers, not just autoscaling.

- Deterministic and probabilistic domains must be separated.

- Redesign means accepting reduced control and engineering around it.

The Core Mistake: Treating AI as Just Another Service

Most distributed systems are designed around three assumptions:

- Deterministic outputs

- Predictable latency

- Stable cost per request

AI violates all three.

When you insert a large language model or ML inference endpoint into a synchronous flow, you introduce:

- Variable latency (cold starts, token length variance, queue contention)

- Non-deterministic outputs

- Cost tied to input size and usage patterns

You don’t “add” something like that. You redesign around it.

The Three Architectural Illusions

Illusion 1: “It’s Just Another API”

A traditional API:

- Returns deterministic responses

- Has bounded execution time

- Fails explicitly

An AI endpoint:

- Produces probabilistic outputs

- May degrade in quality without failing

- Can hallucinate confidently

Architectural implication: You cannot treat AI responses as authoritative truth inside a transactional workflow. If your order-processing flow blocks on a generative AI call for product classification, you’ve just tied revenue to a probabilistic function.

Redesign principle: AI outputs should influence decisions, not directly execute them.

Illusion 2: “We’ll Just Scale It”

AI scaling introduces:

- GPU allocation constraints

- Token-based billing volatility

- Throughput degradation under context growth

Scenario:

A team once embedded document summarization into a customer portal. Everything worked until users started pasting 40-page PDFs. Within days, the extra token usage had pushed cost per request up 6x.

If you do not design economic constraints at the architectural level, you are trusting user behavior to stay rational.

That’s not a strategy.

Redesign principle: AI systems require economic circuit breakers, not just autoscaling.

Examples:

- Hard token limits or budgets per tenant or environment.

- Fast token estimation mechanisms before hitting the model.

- Fallback to cheaper models under load.

- Context compression pipelines.
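A minimal sketch of how the first three ideas could fit together in one admission check. Everything here is an assumption for illustration: the `TenantBudget` class, the characters-per-token heuristic, the limits, and the model tier names are hypothetical, and a real system would use the provider's tokenizer and persistent counters.

```python
from dataclasses import dataclass

# Hypothetical numbers and tier names, chosen only for illustration.
CHARS_PER_TOKEN = 4             # rough heuristic, not a real tokenizer
MAX_TOKENS_PER_REQUEST = 8_000  # hard per-request ceiling
CHEAP_MODEL_THRESHOLD = 0.8     # downgrade once 80% of the budget is spent


class BudgetExceeded(Exception):
    """Raised when a request would break a hard economic limit."""


def estimate_tokens(text: str) -> int:
    """Cheap pre-flight estimate, so cost is known before the model is called."""
    return max(1, len(text) // CHARS_PER_TOKEN)


@dataclass
class TenantBudget:
    daily_token_limit: int
    used_today: int = 0

    def admit(self, text: str) -> str:
        """Return the model tier to use, or raise if the request is refused."""
        tokens = estimate_tokens(text)
        if tokens > MAX_TOKENS_PER_REQUEST:
            raise BudgetExceeded("request too large; compress or chunk the context")
        if self.used_today + tokens > self.daily_token_limit:
            raise BudgetExceeded("tenant budget exhausted")
        self.used_today += tokens
        # Fall back to the cheaper tier as the budget runs down.
        if self.used_today >= CHEAP_MODEL_THRESHOLD * self.daily_token_limit:
            return "cheap-model"
        return "primary-model"
```

The point of the design is that the refusal happens before any tokens are billed: the breaker trips on an estimate, not on an invoice.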

Illusion 3: “We’ll Wrap It in a Microservice”

Wrapping AI inside a microservice does not isolate its complexity. It pushes uncertainty deeper into the system.

Common symptoms:

- Retry storms due to transient inference failures

- Latency propagation across synchronous chains

- Observability blind spots (you log HTTP 200, but quality is degrading)
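The first two symptoms can at least be contained with a bounded retry policy. The sketch below is one possible shape, not a prescription: `call_with_deadline` is a hypothetical helper, `TimeoutError` stands in for whatever transient error a real client raises, and the attempt count, delays, and deadline are arbitrary.

```python
import random
import time
from typing import Callable


def call_with_deadline(
    infer: Callable[[], str],
    fallback: str,
    max_attempts: int = 3,
    deadline_s: float = 2.0,
    base_delay_s: float = 0.1,
    sleep: Callable[[float], None] = time.sleep,
) -> str:
    """Try `infer` a bounded number of times within a total time budget."""
    start = time.monotonic()
    for attempt in range(max_attempts):
        try:
            return infer()
        except TimeoutError:  # stand-in for a transient inference failure
            # Full jitter: a random delay under an exponentially growing cap,
            # so many callers do not retry in lockstep.
            delay = random.uniform(0, base_delay_s * 2 ** attempt)
            out_of_attempts = attempt == max_attempts - 1
            out_of_time = time.monotonic() - start + delay > deadline_s
            if out_of_attempts or out_of_time:
                break
            sleep(delay)
    # Degrade instead of propagating latency up the call chain.
    return fallback
```

A caller might use it as `call_with_deadline(lambda: client.summarize(doc), fallback="Summary unavailable")`, where `client` is whatever model wrapper the system already has.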

You don’t need another microservice. You need a new interaction model.

What Redesign Actually Means

Redesign does not mean rewriting your platform. It means changing architectural posture around four core areas.

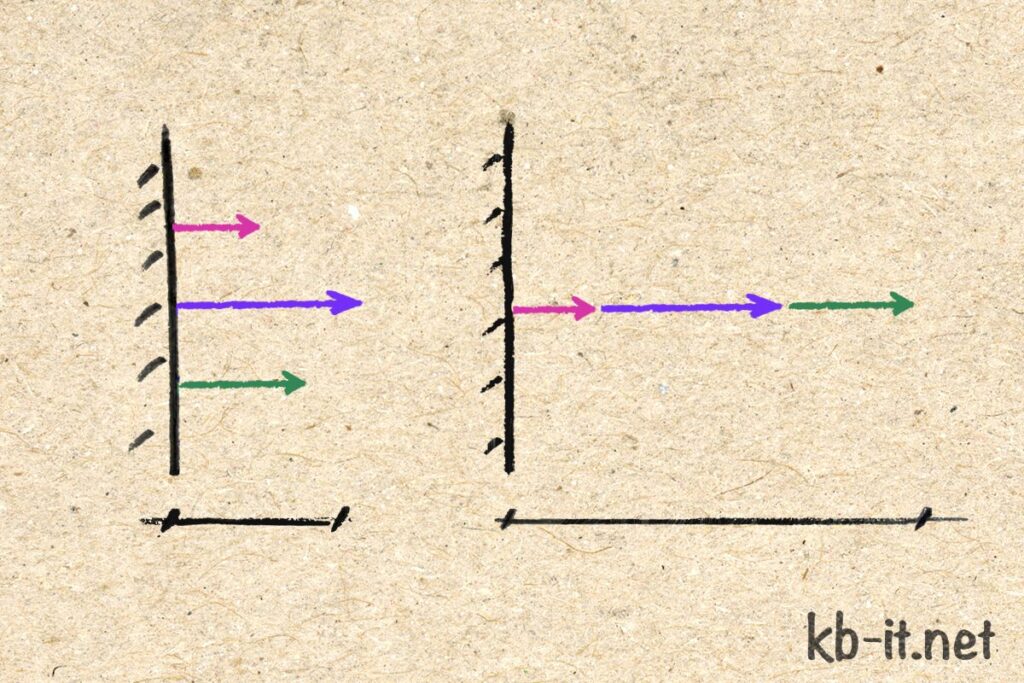

A. Move From Synchronous to Asynchronous by Default

Most AI integrations fail because they assume request/response is sacred.

Instead:

- Queue AI tasks

- Allow partial responses

- Accept eventual consistency where possible

- Separate user interaction from heavy inference

Mental model:

Treat AI like a background analyst, not a blocking function.

When we redesigned a document processing system this way, perceived latency dropped even though actual processing time remained the same.
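As a rough illustration of the "background analyst" model, here is a sketch using Python's `asyncio`. The in-memory `jobs` dict, the `submit` helper, and `fake_inference` are all hypothetical stand-ins; a production system would more likely use a durable queue and a persistent job store.

```python
import asyncio
import uuid

# In-memory job store for the sketch; a real system would persist this.
jobs: dict = {}


async def fake_inference(text: str) -> str:
    """Placeholder for a slow model call."""
    await asyncio.sleep(0.05)
    return text[:20]


async def worker(queue: asyncio.Queue) -> None:
    """Background worker: pulls jobs and records results or failures."""
    while True:
        job_id, text = await queue.get()
        try:
            jobs[job_id] = {"status": "done", "result": await fake_inference(text)}
        except Exception as exc:
            jobs[job_id] = {"status": "failed", "error": str(exc)}
        finally:
            queue.task_done()


def submit(queue: asyncio.Queue, text: str) -> str:
    """Enqueue work and return at once; the caller polls or is notified later."""
    job_id = uuid.uuid4().hex
    jobs[job_id] = {"status": "pending"}
    queue.put_nowait((job_id, text))
    return job_id


async def main() -> dict:
    queue: asyncio.Queue = asyncio.Queue()
    task = asyncio.create_task(worker(queue))
    job_id = submit(queue, "a long document to summarize")
    status_at_submit = jobs[job_id]["status"]  # the user already has a response
    await queue.join()                         # demo only: wait for the worker
    task.cancel()
    return {"at_submit": status_at_submit, **jobs[job_id]}
```

Running `asyncio.run(main())` shows the job reported as pending at submission time and done afterwards. The inference takes just as long as before; the user simply is not blocked on it.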

B. Separate Deterministic and Probabilistic Domains

Do not mix business-critical logic with probabilistic outputs.

Create boundaries:

- Deterministic core: billing, compliance, state transitions

- Probabilistic layer: classification, summarization, recommendations

The deterministic layer must validate, constrain, or post-process AI outputs.

This prevents:

- Hallucinated database writes

- Invalid state transitions

- Regulatory exposure
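One way to picture the boundary is a deterministic gate that every AI suggestion must pass before anything is persisted. This is a sketch under stated assumptions: the `Suggestion` type, the category list, and the confidence threshold are hypothetical, and it assumes the probabilistic layer can supply some confidence score, which not every model API does.

```python
from dataclasses import dataclass
from typing import Optional

ALLOWED_CATEGORIES = {"electronics", "apparel", "grocery"}  # hypothetical catalog
MIN_CONFIDENCE = 0.85                                        # hypothetical threshold


@dataclass(frozen=True)
class Suggestion:
    """What the probabilistic layer hands over: a proposal, never a command."""
    category: str
    confidence: float


def accept_category(s: Suggestion) -> Optional[str]:
    """Deterministic gate: a category safe to persist, or None to route for review."""
    category = s.category.strip().lower()
    if category not in ALLOWED_CATEGORIES:
        return None  # a hallucinated or malformed value never reaches the database
    if not (MIN_CONFIDENCE <= s.confidence <= 1.0):
        return None  # low, out-of-range, or NaN confidence is rejected
    return category
```

The deterministic core only ever sees values from a closed set it already understands; anything else becomes a review task rather than a state transition.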

C. Design for Model Evolution

AI components evolve faster than traditional services. Your architecture becomes fragile if it assumes:

- One model

- One embedding strategy

- One prompt structure

Redesign patterns:

- Abstract model providers behind capability interfaces

- Externalize prompts/configuration

- Version embeddings and schemas explicitly

Models are volatile dependencies. Treat them like third-party infrastructure, not internal code.
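A small sketch of what a capability interface with externalized, versioned prompts might look like. The names `Summarizer`, `EchoProvider`, `PROMPTS`, and `build_summarizer` are invented for illustration, and the provider here fakes the model call so the example stays self-contained.

```python
from typing import Protocol

# Prompts live in configuration, keyed by name and version. A dict stands in
# for a config file or config service here.
PROMPTS = {
    ("summarize", "v2"): "Summarize the following text in one sentence:\n{text}",
}


class Summarizer(Protocol):
    """A capability, not a vendor: callers depend on this and nothing else."""

    def summarize(self, text: str) -> str: ...


class EchoProvider:
    """Stand-in provider for the sketch; a real one would call a model API."""

    def __init__(self, prompt_version: str) -> None:
        self.template = PROMPTS[("summarize", prompt_version)]

    def summarize(self, text: str) -> str:
        prompt = self.template.format(text=text)
        # Fake "model": return the last line of the prompt, truncated.
        return prompt.splitlines()[-1][:40]


def build_summarizer(config: dict) -> Summarizer:
    """Pick the provider from configuration, so a model swap is a config change."""
    providers = {"echo": EchoProvider}
    return providers[config["provider"]](config["prompt_version"])
```

With this shape, calling code only ever does `build_summarizer(config).summarize(text)`; replacing the model or revising the prompt touches configuration and the provider registry, not the business logic.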

D. Build Observability Around Quality, Not Just Uptime

Traditional monitoring answers:

- Is the service up?

- Is latency acceptable?

AI monitoring must answer:

- Is output quality degrading?

- Is distribution shifting?

- Are hallucination rates increasing?

If you don’t track semantic drift, you are operating blind. This is where many “working” AI systems quietly deteriorate for months.
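As a starting point, quality can be tracked with the same kind of rolling metric used for error rates. The sketch below assumes each response already receives some pass/fail quality check (schema validation, a heuristic, a sampled human review); the `QualityMonitor` class, window size, baseline, and tolerance are hypothetical. It measures a proxy for quality, not semantic drift itself.

```python
from collections import deque


class QualityMonitor:
    """Tracks a rolling failure rate for a per-response quality check."""

    def __init__(self, window: int, baseline_rate: float, tolerance: float) -> None:
        self.results = deque(maxlen=window)  # oldest results fall off automatically
        self.baseline_rate = baseline_rate
        self.tolerance = tolerance

    def record(self, passed_check: bool) -> None:
        self.results.append(passed_check)

    @property
    def failure_rate(self) -> float:
        if not self.results:
            return 0.0
        return self.results.count(False) / len(self.results)

    def degraded(self) -> bool:
        """True once the window is full and failures exceed baseline + tolerance."""
        window_full = len(self.results) == self.results.maxlen
        return window_full and self.failure_rate > self.baseline_rate + self.tolerance
```

An alert wired to `degraded()` fires while the service is still returning HTTP 200, which is exactly the case uptime monitoring misses.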

The Mental Model: AI as a Volatile Core

Think of traditional architecture as steel beams. AI is not steel; it is reinforced glass: powerful, transparent, and valuable, but brittle under incorrect load assumptions. If you embed reinforced glass into a structure designed only for steel, stress fractures appear.

Redesign means:

- Adjusting load distribution

- Adding structural buffers

- Accounting for material properties

AI changes the material properties of your system.

Signs You Haven’t Redesigned (You’ve Just Added AI)

- AI calls sit inside synchronous business flows.

- There is no fallback mode.

- Costs are monitored monthly, not per request.

- Outputs are trusted without validation.

- Model changes require code changes.

If this describes your system, you haven’t integrated AI.

You’ve increased systemic risk.

Conclusion:

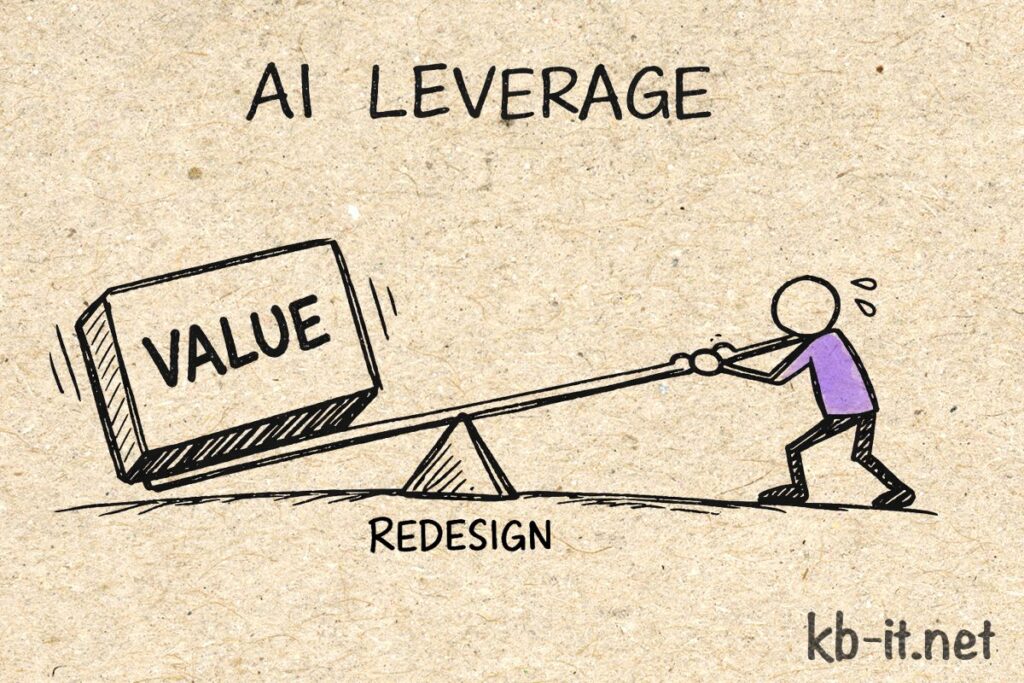

AI Demands Architectural Humility

Stop adding AI to your architecture.

Redesign around:

- Probabilistic outputs

- Economic volatility

- Latency variability

- Continuous evolution

You must recognize that AI is not a plug-in capability. It alters system dynamics at a structural level.

When you redesign intentionally, AI becomes leverage.

When you bolt it on, it becomes technical debt with a GPU bill attached.

Key Takeaways:

- AI violates deterministic architectural assumptions.

- Synchronous integration is the most common failure pattern.

- Economic circuit breakers are architectural, not financial afterthoughts.

- Deterministic and probabilistic domains must be separated.

- Observability must include quality and drift metrics.

If you’re leading AI initiatives, don’t ask:

“Where do we call the model?”

Ask instead:

“What must change in our architecture because we no longer control the output?”

That question changes everything.